Understanding Disciplinary Differences in Peer Review

Findings from a new study can inform debates about peer review.

The peer-review process that serves as the main gatekeeper for scholarly journals is supposed to ensure that published manuscripts meet the standards of the discipline and are of sufficient quality and importance to warrant publication. The acquisition of new knowledge and the development of new theories, methods, and interpretations in the sciences, social sciences, and humanities are at the heart of the academic enterprise. For scholarly journals, the peer-review process is of utmost importance because of its impact on decisions about which scholarly works are worthy of publication and dissemination. Moreover, a faculty member’s record of publication, a direct result of the peer-review process, is part of the assessment used for career milestones such as tenure and promotion, appointment to editorial boards, and nomination for leadership positions.

Amid debates about various aspects of peer review, one important question has been largely overlooked: Are there patterns in the type of peer review used by scholarly journals across broad disciplinary categories and even specific subjects or disciplines? My study of more than 4,500 journals—which focused specifically on the use of single-blind and double-blind peer review—found that patterned variations clearly exist.

Why should one be knowledgeable about variations by discipline? Academics are aware of the peer-review process used by the journals to which they submit manuscripts. In turn, they may assume that the manuscripts of scholars in other disciplines are evaluated using that same process. The lack of awareness of potential differences in the normative peer-review process by discipline may lead to unintentional and even undetectable misunderstandings in informal conversations, in discussion of tenure and promotion cases by campus-wide decision-making bodies, and in larger debates about the peer-review process. Since peer review is of central importance to the academic enterprise, disciplinary variations and the misunderstandings they may cause warrant closer attention.

The Study

In 2020, I undertook a large empirical study of the type of peer review used by scholarly journals in broad disciplinary categories as well as more specific subjects or disciplines. Although peer review is also used to assess grant and fellowship applications as well as manuscripts submitted to book publishers, I focused on peer review used by scholarly journals from three of the top publishers because of the large number of journals and the range of disciplines represented. I analyzed a sample consisting of all 4,754 scholarly journals published by Elsevier (N=2,178), Wiley (N=1,569), and SAGE (N=1,007) that use either single-blind or double-blind peer review. In single-blind review, reviewers’ identities are blinded (that is, not known to authors), though authors’ identities are revealed to reviewers at the time of review. In double-blind review, both reviewers’ and authors’ identities are blinded (that is, not revealed to one another at the time of review). Thus, the difference between single- and double-blind peer review lies only in whether authors’ identities are revealed to reviewers. I limited the study to single- and double-blind review because fewer than 2 percent of these publishers’ peer-reviewed journals use other types of review (such as triple-blind review and open review, which are described later).
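
The sample arithmetic above is easy to verify. The short Python sketch below uses only the three publisher counts reported in this section; the names `SAMPLE` and `publisher_shares` are illustrative choices, and the percentage shares are derived here rather than reported in the study.

```python
# Journal counts per publisher, as reported in the study description.
SAMPLE = {"Elsevier": 2178, "Wiley": 1569, "SAGE": 1007}


def publisher_shares(sample):
    """Return each publisher's share of the sample as a percentage (one decimal)."""
    total = sum(sample.values())
    return {name: round(100 * count / total, 1) for name, count in sample.items()}


TOTAL = sum(SAMPLE.values())       # should match the reported 4,754 journals
SHARES = publisher_shares(SAMPLE)  # derived shares, not figures from the study

if __name__ == "__main__":
    print(f"Total journals: {TOTAL}")
    for name, share in SHARES.items():
        print(f"{name}: {share}% of the sample")
```

The three counts sum to the reported total, and Elsevier journals make up a little under half of the sample.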

I found information about the type of peer review currently used by each journal on the website of either the publisher or the journal. Each publisher classifies journals into four categories referred to in this study as broad disciplinary categories: materials science/physical sciences and engineering, life sciences/life and biomedical sciences, health/health sciences, and social sciences and humanities. I use the term specific subjects to refer to publishers’ more detailed classifications of disciplines. SAGE classifies journals into seventy-four specific subjects, Wiley classifies journals into seventeen specific subjects and more than five hundred subclassifications, and Elsevier classifies journals into twenty-four specific subjects and more than two hundred subclassifications. I based my classifications of journals entirely on the publisher’s listings. Since the disciplines that publishers included in the four broad disciplinary categories as well as in the more specific subjects were not identical across the three publishers, I created a separate data set and separately report the results for each of the three publishers. Even so, readers will notice that across the three publishers the four broad disciplinary categories are similar enough in name to draw conclusions about patterns regarding the type of peer review.

Key Findings

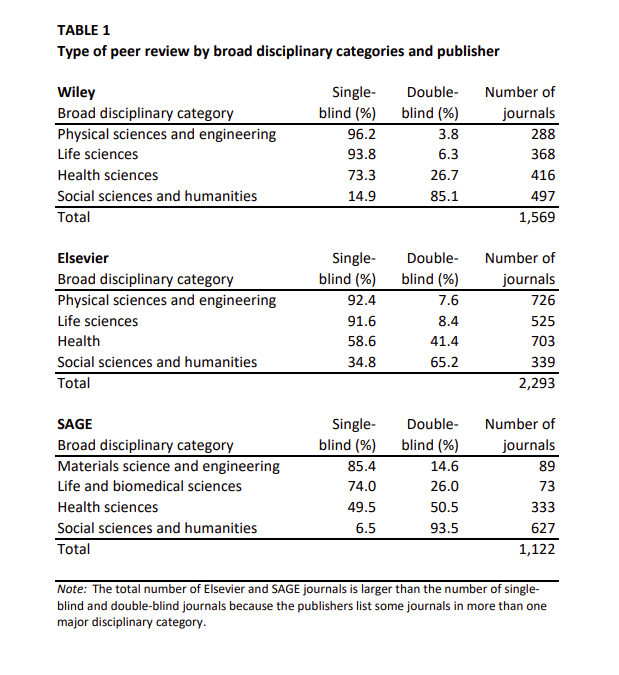

Table 1 presents the four broad disciplinary categories arranged in descending order based on the percentage of journals that use single-blind review.
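
For readers who want to reproduce this kind of tabulation with their own journal lists, a minimal Python sketch follows. The function name, the record layout, and above all the toy records are assumptions made for illustration: the counts are invented placeholders and are not the study's data. In keeping with the study design, the function would be run once per publisher on that publisher's own data set.

```python
from collections import Counter


def single_blind_ranking(journals):
    """Rank categories by the percentage of journals using single-blind review.

    `journals` is an iterable of (category, review_type) pairs, where
    review_type is either "single" or "double".
    """
    totals = Counter()
    singles = Counter()
    for category, review_type in journals:
        totals[category] += 1
        if review_type == "single":
            singles[category] += 1
    rows = [(cat, 100 * singles[cat] / totals[cat]) for cat in totals]
    # Descending order by percentage single-blind, as in the tables.
    return sorted(rows, key=lambda row: row[1], reverse=True)


# Invented toy records for one hypothetical publisher (NOT the study's data).
TOY_JOURNALS = (
    [("social sciences and humanities", "single")] * 1
    + [("social sciences and humanities", "double")] * 9
    + [("physical sciences and engineering", "single")] * 9
    + [("physical sciences and engineering", "double")] * 1
    + [("health sciences", "single")] * 6
    + [("health sciences", "double")] * 4
)

RANKING = single_blind_ranking(TOY_JOURNALS)

if __name__ == "__main__":
    for category, pct_single in RANKING:
        print(f"{category}: {pct_single:.1f}% single-blind")
```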

For all three publishers, the four broad disciplinary categories appear in the same order. Materials science/physical sciences and engineering ranks first on the list of categories, with more than 85 percent of journals using single-blind peer review. Life sciences/life and biomedical sciences ranks second for all three publishers, with the percentage of journals using single-blind review often close to that for materials science/physical sciences and engineering. In addition, for all three publishers, the percentage of journals in health/health sciences that use single-blind review is notably lower than the percentage in the other two science-oriented broad disciplinary categories. In stark contrast, journals in the social sciences and humanities category are by far the most likely to use double-blind review for all three publishers. For example, more than 85 percent of SAGE and Wiley journals in this category use double-blind review. The variation in the percentage of journals using single- and double-blind review in similarly named categories across the three publishers is likely a consequence of differences in the disciplinary mix of journals within the categories across publishers. Nevertheless, for all three publishers, there is a clear pattern:

- Journals in the materials science/physical sciences and engineering category as well as in the life sciences/life and biomedical sciences category are more likely to use single-blind review.

- Journals in the social sciences and humanities category are more likely to use double-blind review.

- Journals in the health/health sciences category fall somewhere in between.

Tables 2–4 display the percentage of journals that use single- or double-blind peer review by more specific subjects, though each publisher used a somewhat different system of classification. In each table, the specific subjects are arranged in descending order based on the percentage of journals that use single-blind peer review. Although some key findings are presented here, I encourage curious readers to peruse these more detailed tables.

Table 2 displays the results for SAGE journals in fifty-three specific subjects. Notably, the sixteen specific subjects for which more than two-thirds of the journals use single-blind peer review are all in the three broad science-oriented disciplinary categories: materials science and engineering, life and biomedical sciences, and health sciences. In contrast, for journals in nearly all of the specific subjects categorized by SAGE as social sciences and humanities, more than 85 percent use a double-blind review process.

The general pattern for the type of peer review used by Wiley journals for the fifteen specific subjects displayed in table 3 is similar to the pattern for SAGE journals. Although Wiley does not classify specific subjects into only one or two of the four broad disciplinary categories, the journals in specific subjects in the sciences, particularly the physical sciences, are most likely to use single-blind review. For example, more than 98 percent of the journals in chemistry use single-blind review. The only exception in the sciences is the specific subject of nursing, dentistry, and healthcare, for which two-thirds of the journals use double-blind review. In addition, journals in specific subjects in the social sciences are the most likely to use double-blind review. In fact, nearly all of the specific subjects for which a majority of journals use double-blind review are in the social sciences and humanities, with more than 90 percent of journals in the social and behavioral sciences using double-blind review.

The general pattern for Elsevier journals, as table 4 shows, is quite similar to that of the SAGE and Wiley journals. Nearly 80 percent or more of Elsevier journals in specific subjects listed under physical sciences and engineering as well as the life sciences use single-blind review, whereas Elsevier journals in specific subjects listed in the social sciences and humanities category are more likely to use double-blind review. For example, more than 90 percent of journals in earth and planetary science as well as in chemistry use single-blind review. In contrast, about 60 percent of journals in the social sciences as well as in the arts and humanities use double-blind review.

So What?

Armed with the findings presented here, readers now know that journals in other disciplines may use a type of peer review quite different from the journals with which they have experience. Two very different broad questions emerge from this. First, what might explain the patterns that emerged from the study? And second, how might the knowledge of such patterns have an impact on future discussions and debates about peer review? Although it is tempting to move immediately to the second question, insights about the answer to the first question may also provide additional context for the second question.

Explaining the Patterns of Peer Review

Adam Etkin, Thomas Gaston, and Jason Roberts, in their 2017 book, Peer Review: Reform and Renewal in Scientific Publishing, explain that before the mid-seventeenth century, scholars shared their work to solicit feedback, but this practice was not peer review as we think of it today. By the early 1800s, the process of refereeing manuscripts for some learned societies and their associated journals shifted from in-house reviewers to external reviewers. Over time, peer review evolved into its current state, though the findings described above reveal that it is certainly not a uniform process across academic disciplines.

How might one explain the disciplinary variations in peer review? One answer might lie in characteristics of the disciplines themselves. Anthony Biglan, in his 1973 Journal of Applied Psychology article, “Relationships between Subject Matter Characteristics and the Structure and Output of University Departments,” identifies three particular dimensions on which subject matter varies across disciplines: (1) “hard-soft,” with the sciences at one end, humanities at the other end, and social sciences between them; (2) “pure-applied,” the extent to which a discipline is focused on real-world applications; and (3) “life systems/non-life systems,” the extent to which a discipline focuses on organic or inorganic objects.

The “hard-soft” dimension, particularly insofar as it parallels the quantitative-qualitative continuum, may be an excellent starting point. Michèle Lamont, in her 2009 book, How Professors Think: Inside the Curious World of Academic Judgment, describes how the judgment of excellence in evaluating fellowship and grant applications varies among more quantitative and more qualitative disciplines. Lamont found, for example, that economists focused on an applicant’s use of mathematical tools and that evaluators in economics, political science, and sociology were likely to focus on empiricism whereas those in English literature took a more relativistic approach, and humanists, more generally, thought of interpretative skills as a measure of excellence.

Biglan’s “hard-soft” dimension, taken in tandem with his other two dimensions, helps explain the patterns in peer review by providing a clear differentiation among disciplines. Admittedly, Biglan did not study or attempt to classify all disciplines. Consequently, his four-category classification of “hard” disciplines includes only those disciplines he used in his analysis:

- pure, non-life systems (astronomy, chemistry, geology, mathematics, and physics)

- pure, life systems (botany, entomology, microbiology, physiology, and zoology)

- applied, non-life systems (ceramic engineering, civil engineering, mechanical engineering, and computer science)

- applied, life systems (agronomy, dairy science, horticulture, and agricultural economics)

Similarly, his four-category classification of “soft” disciplines includes only those disciplines he used in his analysis:

- pure, non-life systems (English, German, history, philosophy, Russian, and communications)

- pure, life systems (anthropology, political science, psychology, and sociology)

- applied, non-life systems (accounting, finance, and economics)

- applied, life systems (education-related fields)

Journals in the broad disciplinary category of materials science/physical sciences and engineering as well as those in life sciences/life and biomedical sciences predominantly used single-blind peer review, and they fit closely with three of Biglan’s four “hard” categories: the pure/non-life systems and pure/life systems categories as well as the applied/non-life systems category. Journals in health/health sciences were much less likely to be predominantly single-blind and would be found in Biglan’s remaining “hard” category, applied/life systems. Moreover, the results of SAGE specific subjects in the broad disciplinary category of health sciences may map onto the “pure-applied” dimension: more than two-thirds of journals in nursing, public health, and allied health, which are “applied,” use double-blind review, whereas more than two-thirds of the journals in most of the other more “pure” medical specialties use single-blind review, including, for example, more than 80 percent of journals in otolaryngology and oncology. Biglan’s dimensions may also help explain some differences among Elsevier journals in psychology. For example, more than 85 percent of journals in neuropsychology and physiological psychology, a subject close to the life sciences, use single-blind review, whereas about 70 percent of journals in social psychology use double-blind review, perhaps a reflection of that subfield in psychology being more of a “soft” science focused on life systems. Biglan’s “soft” pure/non-life systems disciplines were predominantly those in the humanities, which aligns closely with disciplines that predominantly use double-blind review.
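
To make the mapping concrete, the sketch below encodes Biglan’s eight cells as listed above and pairs each cell with the tendency suggested in this section. The lookup table comes directly from the lists; the function `tentative_review_tendency` and its three labels are my own shorthand for the loose alignment described here, not a rule proposed by Biglan or a finding reported as such.

```python
# Biglan's cells as listed in this article:
# (hard/soft, pure/applied, life/non-life) -> disciplines he analyzed.
BIGLAN = {
    ("hard", "pure", "non-life"): ["astronomy", "chemistry", "geology", "mathematics", "physics"],
    ("hard", "pure", "life"): ["botany", "entomology", "microbiology", "physiology", "zoology"],
    ("hard", "applied", "non-life"): ["ceramic engineering", "civil engineering",
                                      "mechanical engineering", "computer science"],
    ("hard", "applied", "life"): ["agronomy", "dairy science", "horticulture",
                                  "agricultural economics"],
    ("soft", "pure", "non-life"): ["English", "German", "history", "philosophy",
                                   "Russian", "communications"],
    ("soft", "pure", "life"): ["anthropology", "political science", "psychology", "sociology"],
    ("soft", "applied", "non-life"): ["accounting", "finance", "economics"],
    ("soft", "applied", "life"): ["education-related fields"],
}


def biglan_cell(discipline):
    """Return the Biglan cell for a discipline, or None if he did not classify it."""
    for cell, disciplines in BIGLAN.items():
        if discipline in disciplines:
            return cell
    return None


def tentative_review_tendency(cell):
    """Illustrative shorthand for the alignment discussed in the text (an assumption)."""
    hardness, orientation, system = cell
    if hardness == "soft":
        return "double-blind"
    if orientation == "applied" and system == "life":
        return "mixed"  # the cell where health-related journals would fall
    return "single-blind"


if __name__ == "__main__":
    for name in ["chemistry", "agronomy", "sociology"]:
        cell = biglan_cell(name)
        print(name, cell, tentative_review_tendency(cell))
```

Disciplines Biglan did not analyze, such as nursing, return `None` from the lookup, which is a reminder that the framework covers only part of the publishers’ subject lists.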

Despite the alignment of broad disciplinary categories and specific subjects with some of Biglan’s categories, his framework was not designed to explain why journals in disciplines considered “hard” would be more likely to use single-blind review or why those in disciplines considered “soft” would be more likely to use double-blind review. His framework also does not explain why the type of peer review for applied “hard” disciplines differed from that of other “hard” disciplines whereas being applied had little impact on the type of peer review for “soft” disciplines. Other dimensions may also be important to consider, including the extent to which journals in the broad disciplinary categories or specific subjects focus on quantitative or nonquantitative aspects of a discipline or whether research in a field is considered empirical rather than relativistic or interpretative. Some may characterize scholarly work in the sciences as more objective and characterize scholarly work in the humanities as more subjective. Moreover, the social sciences and humanities are more likely than the sciences to explicitly examine the impact of gender and race, for example, and consequently may have a preference for a double-blind peer-review process, which could be viewed as less subject to bias because the characteristics of the authors are not known to reviewers.

The Impact on Debates about Peer Review

I will not try here to convince readers that one type of peer review is better than another. My main goal is to inform those across the academy about some stark differences by broad disciplinary categories and specific subjects in the type of peer-review process used by journals. At the same time, the desired outcome of any peer-review process is the acceptance of manuscripts that meet the standards of a given discipline and are of sufficient quality and importance to warrant publication. Since the use of single-blind and double-blind review was the focus of my empirical study, let’s start with what advocates and skeptics have to say about these two types of peer review.

Well-known sociologist Robert K. Merton explains in his classic 1942 essay, “The Normative Structure of Science,” that the evaluation of scholarly work should be based on universalism and “is not to depend on the personal or social attributes of the protagonist; their race, nationality, religion, class, and personal qualities are as such irrelevant. Objectivity precludes particularism.” In other words, he believed that submitted manuscripts should be judged on their scholarly merit and not on the attributes or qualities of authors.

Some proponents of single-blind peer review believe that reviewers’ knowledge of authors’ identities is not a problem because it has no bearing on their evaluations of manuscripts, and others believe that knowing authors’ identities provides a useful context in which to evaluate manuscripts, since it allows reviewers to place manuscripts in the context of authors’ past work. On a more pragmatic level, proponents of single-blind review point out that authors’ highly specialized research as well as their online postings of working papers, conference papers, and clinical-trial methodologies often makes them easily identifiable. Therefore, advocates believe that it is advantageous to have authors’ identities revealed to reviewers.

Proponents of double-blind peer review argue that it allows reviewers to judge a manuscript on its own merits and removes any conscious or unconscious bias related to the background and identity of authors, including gender, reputation, prestige of home institution, and nationality. Although these personal, professional, and institutional characteristics could potentially introduce bias if they are revealed, Emily A. Largent and Richard T. Snodgrass, in their 2016 article, “Blind Peer Review by Academic Journals,” argue that the research on the actual impact of such characteristics on peer reviews and decisions about publication is often inconclusive, including mixed results within studies and contradictory findings across studies. In this regard, the perception that such biases have been removed may matter mostly because it creates trust in the process.

For all types of blinded reviews, reviewers’ identities are masked (unless reviewers are given the choice and opt to unmask themselves) to enable them to provide the most candid assessments of manuscripts, though this masking could also lead to incomplete or unnecessarily harsh reviews. Of course, editors, who are responsible for determining which manuscripts are sent out for review, for choosing reviewers, and for deciding what will be published, are fully aware of both authors’ and reviewers’ identities. (The selection of editors and reviewers would be an additional facet of peer review worthy of study.)

A small number of journals use triple-blind review, for which authors’ identities are also withheld from editors, or quadruple-blind review, for which the handling editor’s identity is additionally hidden from the author. In contrast, for open peer review, all identities are known, and open peer review can include publishing the reviews along with accepted manuscripts. Advocates believe that open peer review provides the most transparency and potentially the most accountability; however, overly positive reviews or animosity toward reviewers could also result from this approach.
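
The review types discussed in this article differ only in who knows whose identity, which can be summarized in a small Python sketch. The class and field names are illustrative assumptions, and quadruple-blind review is left out for brevity because it would need an additional flag for the handling editor’s identity.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ReviewModel:
    """Who knows whose identity at the time of review."""
    reviewer_knows_author: bool
    author_knows_reviewer: bool
    editor_knows_author: bool


# Flags follow the descriptions in this article.
MODELS = {
    "single-blind": ReviewModel(True, False, True),
    "double-blind": ReviewModel(False, False, True),
    "triple-blind": ReviewModel(False, False, False),
    "open": ReviewModel(True, True, True),
}


def differing_fields(first_name, second_name):
    """Return the names of the flags on which two review models differ."""
    first = asdict(MODELS[first_name])
    second = asdict(MODELS[second_name])
    return [key for key in first if first[key] != second[key]]


if __name__ == "__main__":
    print(differing_fields("single-blind", "double-blind"))
    print(differing_fields("double-blind", "triple-blind"))
    print(differing_fields("single-blind", "open"))
```

Comparing single-blind with double-blind review returns a single flag, `reviewer_knows_author`, which matches the earlier observation that the two differ only in whether authors’ identities are revealed to reviewers.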

Conclusion

My hope is that the results described above aid in ongoing discussions about the merits of and best practices in peer review. At the very least, academics in different disciplines should be aware of patterned differences in types of peer review and check with colleagues about what process they have in mind when conversations and debates take place.

Such disciplinary differences have a bearing on many important questions about peer-review practices. How do we ensure that the peer-review process is fair? How do we define and measure fairness? What is the relationship between equity and fairness? How do we ensure that the peer-review process is perceived as legitimate? How do we best avoid biases in the peer-review process, regardless of whether those biases are intended or not? Are the peer-review practices of journals in different disciplines still yielding the publication of high-quality, important work? If determining the importance of a scholar’s work is a more difficult task than evaluating the methods of research used, what implications does that have for the present and future shape of peer review in different disciplines?

Understanding the disciplinary patterns in the use of single- and double-blind peer review as well as their possible underpinnings should allow scholars to develop more informed opinions about which peer-review processes are most likely to identify high-quality, important work for journals, book publishers, and granting agencies. Although no peer-review process will be perfect, such understanding should also provide scholars from all disciplines with the potential to be more thoughtful about the shape peer review should take in the future.

Carol J. Auster is professor of sociology emerita at Franklin and Marshall College. She previously served as second vice president of the national AAUP, as a Council member, and as a member of the AAUP’s Committee on Professional Ethics. Auster has written peer-reviewed journal articles about Disney toys, Mother’s and Father’s Day cards, women ice-hockey players, and pet cemeteries, among other topics. The author can be contacted at [email protected] for additional information about the data-collection process.
